Transforming the Building Insurance Industry with Data Engineering

In property underwriting, insurance carriers and real estate firms rely on a powerful tool: data. Their decisions draw on detailed information about a property’s history. This data-driven approach is pivotal in commercial underwriting, allowing these key players to make well-informed choices about coverage and risk assessment.

This article explores why and how property permits and the condition of a property are shaping the insurance domain:

Property history serves as the canvas upon which insurance carriers evaluate risk. Whether a property is well-maintained or has seen numerous modifications significantly impacts insurance decisions. Property permits offer insights into a property’s adherence to local regulations and safety standards, and insurance companies scrutinize these permits to assess potential liabilities and non-compliance issues. Property underwriting is the process of assessing a property’s risk for insurance purposes, and this assessment guides insurance carriers in setting appropriate premiums. When evaluating a property for underwriting, carriers consider various components related to building permits beyond just the roof.

Significant structural changes, such as additions or extensions, are evaluated for their impact on a property’s integrity and safety. The condition and compliance of plumbing and electrical systems are assessed, as issues in these areas can lead to hazards like fires or water damage. Compliance with local building codes and safety regulations is crucial. Non-compliance can raise risks and affect insurance coverage and premiums.

The roof is a crucial focus. Insurance carriers consider the roof’s age and condition when assessing risk. A newer roof, as indicated by a recent permit, suggests lower risk, while an older permit may indicate a need for repairs or replacement, impacting insurance terms and premiums.
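As a rough illustration of how roof age could be derived from permit history, the sketch below takes the most recent roof permit and counts the whole years since it was issued. The record layout (`type`, `issued`) and the function name `roof_age_years` are assumptions made for this example, not fields from any real permit feed.

```python
from datetime import date
from typing import Optional


def roof_age_years(permits: list[dict], as_of: date) -> Optional[int]:
    """Return whole years since the most recent roof permit, or None if there is none."""
    roof_dates = [
        p["issued"] for p in permits
        if p.get("type") == "roof" and p["issued"] <= as_of
    ]
    if not roof_dates:
        return None
    latest = max(roof_dates)
    # Subtract one year if this year's anniversary of the permit has not occurred yet.
    before_anniversary = (as_of.month, as_of.day) < (latest.month, latest.day)
    return as_of.year - latest.year - int(before_anniversary)


# Hypothetical permit history for a single property.
permits = [
    {"type": "roof", "issued": date(2005, 6, 1)},
    {"type": "electrical", "issued": date(2019, 3, 15)},
    {"type": "roof", "issued": date(2018, 9, 20)},
]
print(roof_age_years(permits, as_of=date(2024, 1, 10)))  # 5
```

A permit date is only a proxy for the roof's actual condition, so a figure like this would typically be one input among several rather than a rating on its own.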

Data Engineering for the Insurance Domain

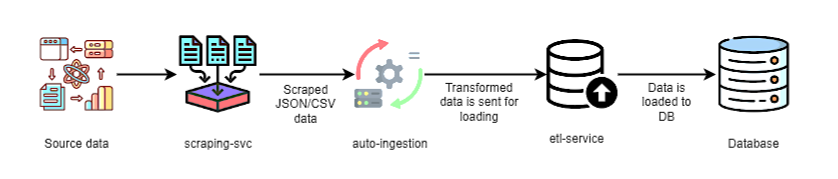

Data engineering is central to handling and improving building permit data. It involves ETL (extract, transform, load), a process that takes a large and varied set of data and makes it clean, standardized, and ready for analysis. Data engineering ensures that the data is of good quality and brings together information from different sources, such as government databases and local authorities, into one place, such as cloud storage.
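To make the ETL idea concrete, here is a minimal sketch using only the Python standard library. It assumes two hypothetical sources that export permits as CSV with different column names and date formats, and it loads them into an in-memory SQLite table. The column names, sample rows, and table schema are all invented for illustration.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical raw exports from two sources with different schemas and date formats.
COUNTY_CSV = "permit_no,address,issued\nB-101, 12 Oak St ,2023-04-05\n"
CITY_CSV = "PermitNumber,SiteAddress,IssueDate\nC-77,98 elm ave,04/17/2022\n"


def extract(raw_csv: str) -> list[dict]:
    """Extract: read CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(row: dict, mapping: dict, date_format: str) -> tuple:
    """Transform: map source columns to a common schema and standardize values."""
    permit_no = row[mapping["permit_no"]].strip()
    address = " ".join(row[mapping["address"]].split()).upper()
    issued = datetime.strptime(row[mapping["issued"]].strip(), date_format).date()
    return permit_no, address, issued.isoformat()


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write the standardized rows into the shared table."""
    conn.executemany("INSERT INTO permits VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE permits (permit_no TEXT, address TEXT, issued TEXT)")

sources = [
    (COUNTY_CSV, {"permit_no": "permit_no", "address": "address", "issued": "issued"}, "%Y-%m-%d"),
    (CITY_CSV, {"permit_no": "PermitNumber", "address": "SiteAddress", "issued": "IssueDate"}, "%m/%d/%Y"),
]
for raw, mapping, fmt in sources:
    load(conn, [transform(r, mapping, fmt) for r in extract(raw)])

rows = conn.execute("SELECT * FROM permits ORDER BY permit_no").fetchall()
print(rows)
# [('B-101', '12 OAK ST', '2023-04-05'), ('C-77', '98 ELM AVE', '2022-04-17')]
```

Keeping the per-source differences in a small mapping, rather than in separate transform functions, is one way to add new jurisdictions without touching the load step.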

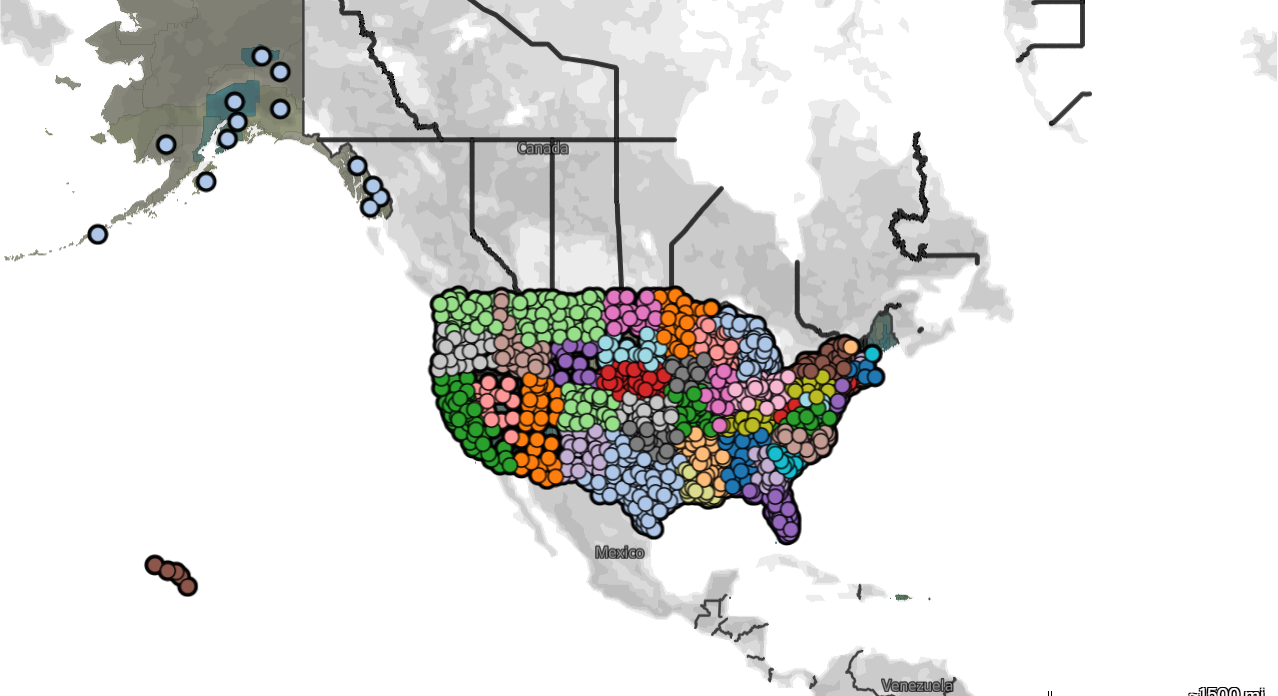

Data engineering is crucial for building permit data because it helps insurance companies, real estate firms, and construction contractors do their jobs better. It allows us to analyze the data quickly and correctly. It also provides a way to manage large volumes of data from all 50 U.S. states. It keeps the data safe and makes it easy to use when making important decisions.

The pipeline streamlines collecting, processing, and managing building permit data for the US Insurance industry. It emphasizes automation, data quality, and efficient data retrieval to support insurance companies in making informed decisions related to property assessments and risk management.

The key features of this data engineering pipeline are:

Efficient Data Scraping

The scraping mechanism executes tasks concurrently, without continuous manual oversight. Multiple scraping tasks can run simultaneously, improving efficiency and speed. The pipeline scrapes permit data from different counties and cities across multiple states and ingests it into the database. Data scraping is optimized to complete within 4 minutes when the data is available at the source. For example, if a user requests permit data for a specific address, the pipeline checks whether the data is available at the source (e.g., a municipal website) and, if so, scrapes the relevant information within 4 minutes.
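One common way to run scraping tasks concurrently in Python is a thread pool. The sketch below is an illustration of that idea under stated assumptions, not the pipeline's actual implementation: `scrape_source` is a placeholder that simulates latency instead of fetching a real site, and the source names are invented. The 240-second constant mirrors the 4-minute target mentioned above.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

SCRAPE_TIMEOUT_SECONDS = 240  # the 4-minute target, used as an overall wait limit


def scrape_source(source: str) -> list[dict]:
    """Placeholder scraper: a real one would fetch and parse the source's permit pages."""
    time.sleep(0.1)  # simulate network latency
    return [{"source": source, "permit_no": f"{source}-001"}]


def scrape_all(sources: list[str], max_workers: int = 8) -> dict:
    """Run one scraping task per source concurrently and collect results or errors."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(scrape_source, s): s for s in sources}
        # as_completed raises TimeoutError if not every task finishes within the limit.
        for future in as_completed(futures, timeout=SCRAPE_TIMEOUT_SECONDS):
            source = futures[future]
            try:
                results[source] = future.result()
            except Exception as exc:  # one failing source should not stop the others
                results[source] = exc
    return results


results = scrape_all(["county_a", "city_b", "city_c"])
for source in sorted(results):
    print(source, results[source])
```

Note that the timeout only bounds how long the caller waits; it does not cancel a thread that is already running, so a production scraper would also set per-request timeouts.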

Geocoding with High Accuracy

The pipeline accurately associates geographic coordinates with property addresses. For example, if a permit data source provides address information, the pipeline can accurately determine the latitude and longitude coordinates for each property. This enables precise mapping and spatial analysis.
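The sketch below shows one way a geocoding step could be wired into such a pipeline: addresses are normalized, each distinct address is looked up once, and out-of-range coordinates are rejected. The article does not name a geocoding service, so the geocoder is passed in as a function; `fake_geocode`, its lookup table, and the coordinates are made up for illustration.

```python
from typing import Callable, Optional

Coordinates = tuple[float, float]  # (latitude, longitude)


def normalize_address(address: str) -> str:
    """Collapse whitespace and uppercase so equivalent spellings share one cache key."""
    return " ".join(address.split()).upper()


def is_valid(coords: Coordinates) -> bool:
    """Check that latitude and longitude fall within their legal ranges."""
    lat, lon = coords
    return -90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0


def geocode_permits(
    permits: list[dict],
    geocode: Callable[[str], Optional[Coordinates]],
) -> list[dict]:
    """Attach lat/lon to each permit, calling the geocoder once per distinct address."""
    cache: dict[str, Optional[Coordinates]] = {}
    enriched = []
    for permit in permits:
        key = normalize_address(permit["address"])
        if key not in cache:
            coords = geocode(key)
            cache[key] = coords if coords is not None and is_valid(coords) else None
        lat, lon = cache[key] or (None, None)
        enriched.append({**permit, "lat": lat, "lon": lon})
    return enriched


# Stand-in geocoder backed by a lookup table; the coordinates are invented.
FAKE_INDEX = {"12 OAK ST": (40.0, -75.0)}
calls = []


def fake_geocode(address: str) -> Optional[Coordinates]:
    calls.append(address)
    return FAKE_INDEX.get(address)


permits = [
    {"permit_no": "B-101", "address": " 12 oak  st "},
    {"permit_no": "B-102", "address": "12 Oak St"},
    {"permit_no": "C-77", "address": "98 Elm Ave"},
]
enriched = geocode_permits(permits, fake_geocode)
for row in enriched:
    print(row)
```

Records whose address cannot be resolved keep `None` coordinates rather than being dropped, so they can be retried or reviewed later.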

Better Data Management

To ensure data quality, the pipeline prevents the entry of duplicate data using a combination of three business features and OLAP CTID mechanisms. If the same permit data is accidentally scraped multiple times, the pipeline detects and eliminates duplicates based on unique criteria, ensuring a clean and reliable dataset.
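A simple way to reject duplicates on a combination of three fields is a composite uniqueness constraint. The sketch below uses SQLite for portability and does not reproduce the CTID-based mechanism mentioned above. The article does not say which three business fields are used, so `permit_no`, `address`, and `issued` are assumptions chosen for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE permits (
        permit_no   TEXT,
        address     TEXT,
        issued      TEXT,
        description TEXT,
        UNIQUE (permit_no, address, issued)  -- hypothetical three-field business key
    )
    """
)


def row_count(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM permits").fetchone()[0]


def ingest(conn: sqlite3.Connection, rows: list[tuple]) -> int:
    """Insert rows, silently skipping any that repeat an existing key; return rows added."""
    before = row_count(conn)
    conn.executemany("INSERT OR IGNORE INTO permits VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return row_count(conn) - before


batch = [
    ("B-101", "12 OAK ST", "2023-04-05", "Roof replacement"),
    ("C-77", "98 ELM AVE", "2022-04-17", "Electrical panel upgrade"),
]
first = ingest(conn, batch)
second = ingest(conn, batch)  # the same data scraped again by accident
print(first, second)  # 2 0
```

Enforcing the rule in the database means a repeated scrape is harmless by construction, instead of relying on every ingestion job to check for duplicates itself.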

The pipeline regularly backs up data to the cloud, ensuring data preservation and availability. In the event of a system failure or data loss, the pipeline can retrieve the last backup to restore missing or corrupted data, maintaining data integrity.

Business Applications of the Data

Contractors

Contractors use data analysis to optimize their services. They analyze locality data, including building permits, to gauge demand in specific areas. By mapping customers to localities using geospatial technology, they can identify opportunities for tailored services and upselling. Contractors proactively target high-permit areas, increasing customer engagement and meeting active market needs. This data-driven approach guides resource allocation, marketing, and decision-making, resulting in increased efficiency and profitability. Data analysis also helps track the success of initiatives, enabling ongoing strategy refinement and operational optimization.

Real Estate

Real estate firms leverage property data to assess a property’s condition, incorporating details like age, maintenance, and renovations to determine its value and listing price. A well-maintained property may command a higher price, while one in need of repairs is priced lower, which keeps pricing accurate. Additionally, customer data helps real estate companies provide personalized service. By utilizing client preferences and transaction history, they offer tailored property recommendations, aiding informed decision-making and building trust with clients.

In the insurance industry, a well-structured ETL pipeline is needed for accurate risk assessment, underwriting, and claims processing, so that insurance companies can make data-driven decisions, improve operational efficiency, and ensure compliance with regulatory requirements.
