Underwater Photography Noise Removal Denoising Images

Image Fusion

Image fusion is the process of combining images from different sources, with different input qualities, to produce a more informative result. Fusion can improve the quality of degraded images, particularly in no-reference image quality enhancement techniques. In the fusion-based strategy, the inputs and weight measures are derived solely from the degraded image. Four weight maps improve the visibility of distant objects when the medium scatters and absorbs light. Two inputs represent colour-corrected and contrast-enhanced versions of the original underwater image or frame; they are used to overcome the limitations of the underwater medium. This single-image approach requires no specialised hardware and no knowledge of the underwater conditions or the scene structure. For video, the fusion framework also keeps adjacent frames temporally coherent by preserving edges and reducing noise. The enhanced images and videos have less noise, better exposure of dark regions, higher global contrast, and sharper edges and fine details, all of which benefit real-time applications.

Why Image Fusion

Multi-sensor data fusion has matured to the point where it calls for more general and formal solutions across application scenarios. Some image processing tasks need a single image with both high spatial and high spectral information, which is essential in remote sensing. The instruments often cannot provide both, either by design or because of how they are operated. Data fusion is one way to address this issue.

Benefits of Image Fusion

Image fusion has several advantages in image processing applications, some of which are listed below.

- High accuracy

- High reliability

- Fast acquisition of information

- Cost-effectiveness

The environment under water differs significantly from the environment above it. Blue and green predominate in most underwater photographs. Visibility is poor because of the physical properties of the medium: light is attenuated as it travels through water, so underwater images are less crisp. As distance and depth increase, the light grows progressively dimmer through absorption and scattering. Scattering changes the direction of the light, while absorption substantially reduces its energy. A small fraction of the light is scattered back from the medium along the line of sight, which lowers the contrast of the scene. As a result, underwater scenes have low contrast, and distant objects appear shrouded in mist. In typical sea water, objects more than 10 metres away are difficult to distinguish, and colours fade as the water gets deeper.
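The degradation described above is often summarised with a simplified image formation model, in which directly transmitted light decays exponentially with distance and backscattered light replaces what is lost. The sketch below illustrates that idea only. The function name, the model form, the attenuation coefficients, and the background colour are all assumptions chosen for illustration, not measurements or methods from this article.

```python
import numpy as np

def simulate_underwater(scene, distance_m, beta, background):
    """Apply a simplified underwater image formation model (illustrative).

    scene:      float array (H, W, 3) in [0, 1], the undegraded colours
    distance_m: camera-to-object distance in metres (scalar or (H, W) array)
    beta:       per-channel attenuation coefficients (R, G, B) in 1/m (assumed)
    background: per-channel colour of the backscattered light (assumed)
    """
    scene = np.asarray(scene, dtype=np.float64)
    d = np.asarray(distance_m, dtype=np.float64)[..., np.newaxis]
    # Transmission: the fraction of light that survives the path to the camera
    t = np.exp(-np.asarray(beta, dtype=np.float64) * d)
    # Attenuated scene light plus light scattered back by the medium
    return scene * t + np.asarray(background, dtype=np.float64) * (1.0 - t)

if __name__ == "__main__":
    white = np.ones((1, 1, 3))
    beta = (0.6, 0.1, 0.05)      # hypothetical values: red fades fastest
    background = (0.0, 0.4, 0.5)  # hypothetical blue-green water colour
    for d in (1.0, 5.0, 10.0):
        print(d, simulate_underwater(white, d, beta, background)[0, 0].round(3))
```

With placeholder coefficients like these, the simulated colour drifts towards the blue-green background as the distance grows, which mirrors the loss of contrast and colour described above.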

The enhancing strategy has three main steps: assigning the inputs (deriving them from the original underwater image), defining the weight measures, and multi-scale fusion of the inputs and weight measures.

Inputs

For fusion algorithms to work well, they need well-fitted inputs and weights. Unlike most existing fusion methods (none of which was designed for underwater scenes), this method uses only a single degraded image. Image fusion in general combines two or more images while keeping their most important features; here, those images are the inputs derived from the single degraded image.
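As a minimal sketch of how two inputs might be derived from one degraded image, the code below produces a colour-corrected version and a contrast-enhanced version. The gray-world white balance and the percentile contrast stretch are stand-in techniques chosen for brevity, and the function names are hypothetical; the article does not specify which operators it uses.

```python
import numpy as np

def gray_world_white_balance(img):
    """Scale each channel so that its mean matches the overall mean."""
    img = np.asarray(img, dtype=np.float64)
    channel_means = img.reshape(-1, 3).mean(axis=0)
    gain = channel_means.mean() / np.maximum(channel_means, 1e-6)
    return np.clip(img * gain, 0.0, 1.0)

def stretch_contrast(img, low_pct=1.0, high_pct=99.0):
    """Linearly stretch intensities between two percentiles to [0, 1]."""
    img = np.asarray(img, dtype=np.float64)
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi - lo < 1e-6:
        return img.copy()  # flat image: nothing to stretch
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0)

def derive_inputs(degraded):
    """Return (colour-corrected, contrast-enhanced) versions of one image.

    `degraded` is a float array of shape (H, W, 3) with values in [0, 1].
    """
    first = gray_world_white_balance(degraded)
    second = stretch_contrast(first)
    return first, second
```

Both outputs have the same shape as the degraded image, so they can be passed directly to the weighting and fusion steps.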

Weight measures

The weight measures must take into account how the restored output should look. We argue that image restoration is closely tied to colour appearance. As a result, measurable values such as salient features, local and global contrast, and exposedness are hard to combine with simple per-pixel blending without introducing artefacts. Pixels with higher weight values contribute more to the final image. The Laplacian weight, the local contrast weight, and the saliency weight are considered.
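The weight measures named above can be sketched with NumPy alone. This is an approximation under stated assumptions, not the exact definitions used in the article: saliency is computed in RGB rather than a perceptual colour space, a box blur stands in for a Gaussian blur, the exposedness parameters (mid-grey target, `sigma=0.25`) are assumed, and adding the maps together is one possible way to combine them. All function names are hypothetical.

```python
import numpy as np

def luminance(img):
    """Weighted sum of the R, G, B channels."""
    return img @ np.array([0.299, 0.587, 0.114])

def box_blur(channel, radius=2):
    """Mean filter with edge padding, built from shifted copies."""
    size = 2 * radius + 1
    padded = np.pad(channel, radius, mode="edge")
    h, w = channel.shape
    total = np.zeros((h, w), dtype=np.float64)
    for dy in range(size):
        for dx in range(size):
            total += padded[dy:dy + h, dx:dx + w]
    return total / (size * size)

def laplacian_weight(lum):
    """Absolute response of a 4-neighbour Laplacian filter."""
    p = np.pad(lum, 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * lum
    return np.abs(lap)

def local_contrast_weight(lum):
    """Deviation of each pixel from its blurred neighbourhood."""
    return np.abs(lum - box_blur(lum))

def saliency_weight(img):
    """Distance between the blurred colour and the mean image colour."""
    blurred = np.stack([box_blur(img[..., c], 1) for c in range(3)], axis=-1)
    mean_colour = img.reshape(-1, 3).mean(axis=0)
    return np.linalg.norm(blurred - mean_colour, axis=-1)

def exposedness_weight(lum, sigma=0.25):
    """Favour pixels whose luminance is close to mid-grey (0.5)."""
    return np.exp(-((lum - 0.5) ** 2) / (2.0 * sigma ** 2))

def weight_map(img, eps=1e-6):
    """Combine the four measures into one map (summation is an assumption)."""
    img = np.asarray(img, dtype=np.float64)
    lum = luminance(img)
    return (laplacian_weight(lum) + local_contrast_weight(lum)
            + saliency_weight(img) + exposedness_weight(lum) + eps)
```

Calling `weight_map` on each derived input gives one `(H, W)` map per input; the small `eps` keeps every weight strictly positive so that later normalisation never divides by zero.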

Fusion

The enhanced image is obtained by fusing the defined inputs with the weight measures at each pixel location.
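The fusion step can be sketched as a multi-scale blend: each input is decomposed into a Laplacian pyramid, each normalised weight map into a smoothed pyramid, and the weighted levels are summed and collapsed. The version below is a simplified illustration, not the article's implementation. It assumes 2x2 block averaging and pixel repetition in place of Gaussian filtering, and the function names and the default of three levels are hypothetical choices.

```python
import numpy as np

def downsample(a):
    """Halve height and width by averaging 2x2 blocks (odd edges cropped)."""
    h, w = a.shape[0] // 2 * 2, a.shape[1] // 2 * 2
    a = a[:h, :w]
    return (a[0::2, 0::2] + a[1::2, 0::2] + a[0::2, 1::2] + a[1::2, 1::2]) / 4.0

def upsample(a, shape):
    """Double height and width by pixel repetition, edge-padded to `shape`."""
    up = a.repeat(2, axis=0).repeat(2, axis=1)
    pad_h, pad_w = shape[0] - up.shape[0], shape[1] - up.shape[1]
    pad = [(0, pad_h), (0, pad_w)] + [(0, 0)] * (a.ndim - 2)
    return np.pad(up, pad, mode="edge")

def smooth_pyramid(a, levels):
    """Successively downsampled copies of `a`, finest level first."""
    pyr = [a]
    for _ in range(levels - 1):
        pyr.append(downsample(pyr[-1]))
    return pyr

def laplacian_pyramid(a, levels):
    """Per-level detail layers plus the coarsest smoothed level."""
    g = smooth_pyramid(a, levels)
    lap = [g[k] - upsample(g[k + 1], g[k].shape) for k in range(levels - 1)]
    return lap + [g[-1]]

def fuse(inputs, weights, levels=3, eps=1e-6):
    """Fuse (H, W, 3) images using (H, W) weight maps; needs H, W >= 2**(levels-1)."""
    inputs = [np.asarray(i, dtype=np.float64) for i in inputs]
    weights = [np.asarray(w, dtype=np.float64) + eps for w in weights]
    total = sum(weights)
    norm = [w / total for w in weights]  # weights sum to 1 at every pixel

    fused = None
    for img, w in zip(inputs, norm):
        lap = laplacian_pyramid(img, levels)
        gw = smooth_pyramid(w, levels)
        contrib = [l * g[..., np.newaxis] for l, g in zip(lap, gw)]
        fused = contrib if fused is None else [f + c for f, c in zip(fused, contrib)]

    # Collapse the fused pyramid from the coarsest level to the finest
    out = fused[-1]
    for level in reversed(fused[:-1]):
        out = level + upsample(out, level.shape)
    return np.clip(out, 0.0, 1.0)
```

Blending at several scales rather than directly at full resolution is intended to limit the artefacts that sharp transitions in the weight maps would otherwise produce. A useful sanity check is that fusing an image with itself returns the same image, whatever the weights.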

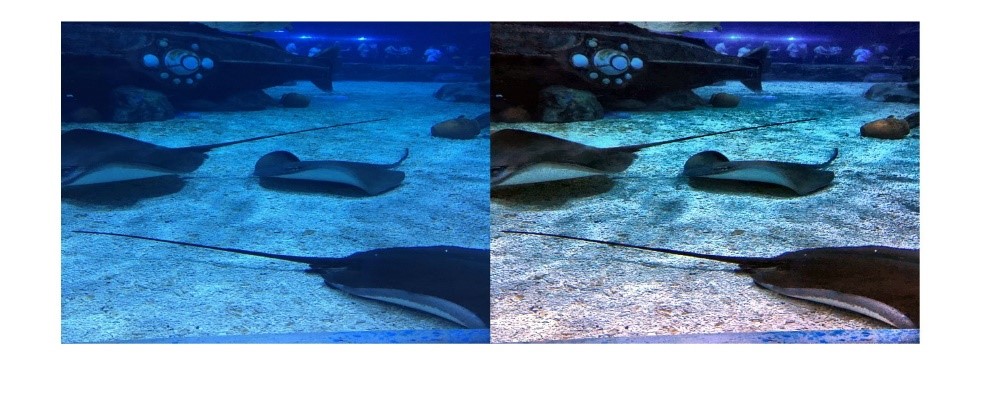

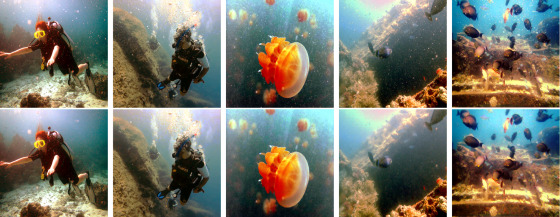

The methodology was applied to numerous underwater images, and its performance was tested. The images were taken from the Underwater Image Enhancement Benchmark (UIEB) dataset. UIEB comprises two subsets: the first contains 890 raw underwater images with high-quality reference images, and the second contains 60 challenging underwater images. Figure 1 presents a selection of underwater images alongside the results of applying the methodology described above, for qualitative evaluation. The images on the left side of Figure 1 are blurry, and most underwater objects cannot be seen clearly, so object detection programmes could not find the smaller objects, which made recognition harder. The fusion process removed the haze, making even the smallest objects and particles hidden in the background visible. Because this pre-processing method produces images of such quality, they can be used in real-time applications.

The fusion technique used to improve underwater images can be applied in a variety of contexts. Most applications use it as a pre-processing step to enhance the quality of underwater images. Two such applications, and how they are used in the real world, are described here.

Fish detection and tracking

Many Android and iOS apps can help you identify fish and act as a guide to the world of fish. Anglers, scuba divers, and many others use these apps for different tasks. The apps contain many pictures and specific information about each fish, such as how deep to dive and where to go to catch the most fish, and they can help you identify a fish right away. Examples include Picture Fish, FishVerify, Fishidy, and FishBrain.

Coral-reef monitoring

Coral reefs protect beaches from storms and erosion, create jobs for local people, and offer places for recreation. They are also a source of new foods and medicines. Reefs provide food, income, and shelter for more than 500 million people. Fishing, diving, and snorkelling on and near reefs bring local businesses hundreds of millions of dollars. The net economic value of the world's coral reefs is estimated at tens of billions of dollars. Underwater Coral Reef is an easy-to-use mobile application that lets you customise your device. It is compatible with almost all devices, does not need a constant Internet connection, uses little battery, and has simple user interface settings.

In addition to these applications, the image enhancement strategy’s fusion procedure is used in sea cucumber identification, pipeline monitoring, and other underwater object detection and identification applications.
